Spain’s government announced plans to hold social media executives accountable for illegal and hateful content online, part of sweeping new digital safety measures aimed at protecting children and curbing harmful material. The reforms also include a ban on children under 16 accessing major social platforms and strict age-verification systems. Why this matters now: rising global concern over mental health risks, online hate speech, AI-generated abuse, and weak moderation has pushed governments to act. These changes could reshape social media regulation in Europe and influence laws worldwide.

Redefining Accountability for Tech Firms

Spain’s Prime Minister, Pedro Sánchez, announced at the World Government Summit in Dubai that his administration will introduce a bill to criminalize algorithmic manipulation and the amplification of illegal content on social media platforms. Under the proposed law, senior executives at major tech companies could face personal liability if their platforms fail to address hate speech, violence, child abuse material, or other unlawful content swiftly and effectively.

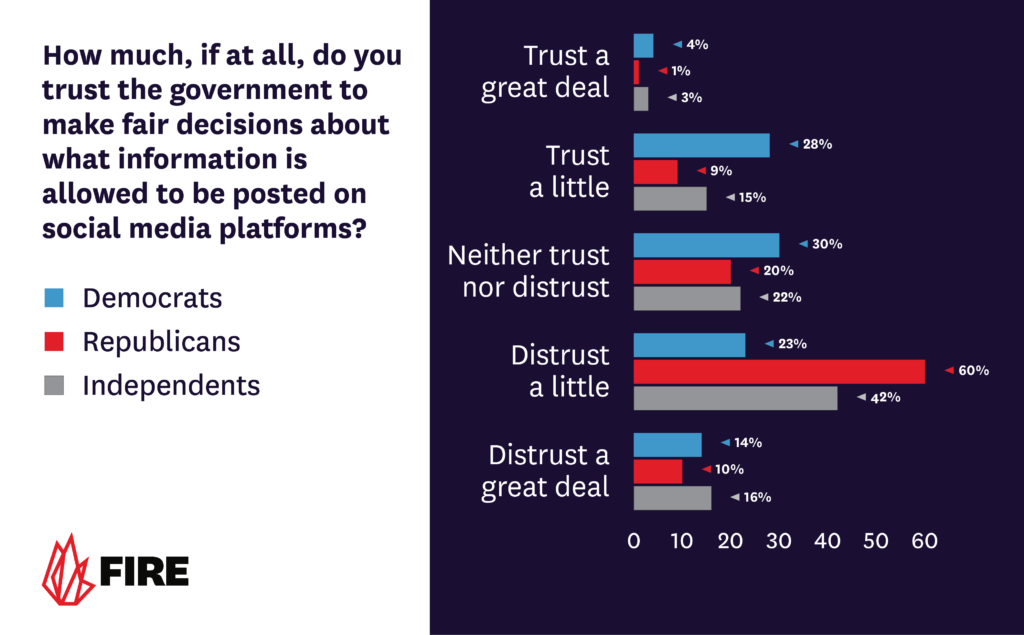

Policymakers argue that existing EU regulations, such as the Digital Services Act, which already requires content moderation and transparency from large platforms, do not go far enough in holding corporate leaders directly accountable. Unlike traditional content takedown rules, this initiative seeks to make executives legally responsible rather than relying solely on platform enforcement teams.

Critics, including free speech advocates, warn that the legislation could chill online expression and raise concerns over who decides what content is unlawful. However, supporters say it fills a regulatory gap, ensuring tech giants cannot ignore harmful material behind complex moderation algorithms.

Protecting Children: Social Media Ban and Age Checks

In a landmark step, Spain confirmed plans to ban children under the age of 16 from using social media platforms, a stricter stance than the minimum age of 13 that many platforms set for themselves today. This measure builds on a similar policy in Australia, which already prohibits social media access for under-16s and has recently led to the removal of millions of teen accounts.

In addition to the under-16 ban, Spanish authorities are insisting on robust age-verification systems that go beyond simple checkbox confirmations. This directly addresses concerns that children routinely bypass weak protections to create accounts, exposing them to addiction risks, harmful behavioral content, cyberbullying, grooming, and exploitative materials.

Experts highlight that social media is increasingly recognized not just as a communication tool but also as a place where algorithmic feeds contribute to mental health stress, anxiety, and early addiction among youth. These factors are central to why Spain’s measures are framed as urgent public safety reforms.

Wider European and Global Context

Spain’s announcement isn’t happening in isolation. Across Europe, France, the United Kingdom, Italy, Greece, and other nations are debating or implementing tougher digital safety rules, particularly focused on minors. Some are already proposing age bans, limits on automated recommendation systems, or sanctions for unsafe algorithmic practices.

These national efforts are occurring within a broader EU legal framework shaped by the Digital Services Act (DSA), which requires platforms to manage illegal content and be transparent about content moderation processes. Even so, EU member states have discretion regarding age limits and enforcement intensity.

The broader movement reflects rising public awareness of online harms, AI-generated content risks, hate speech proliferation, and mental health challenges, particularly for younger users. Spain’s push also signals to other democracies that tech regulation is no longer optional — it’s central to consumer safety and digital governance.

Impact on Social Media Companies and Users

If enacted, the Spanish law would change how platforms like Meta (Facebook/Instagram), TikTok, X (formerly Twitter), Snapchat, and others operate in Europe. Companies may have to invest heavily in moderation technologies, legal compliance teams, and verification systems robust enough to satisfy the new standards.

Executives would gain new incentives to quickly remove blatantly illegal content, but debate persists over whether these changes could inadvertently encourage over-censorship of legitimate speech. Civil liberties groups have signaled concerns that overly broad definitions of illegal material could be misused.

For everyday users, especially families and children, the reforms aim to offer greater protection and safer online environments — but may also limit how younger people engage with global social communities online.

Why This Still Matters

As social networks grow and AI systems generate ever more content, governments face mounting pressure to ensure that digital spaces are safe and that the companies behind them are accountable and legally responsible. Spain’s initiative underscores a shift from passive regulation toward active accountability, a trend expected to influence future global digital policy debates.

With mounting public support and strong international interest, Spain’s bold approach could become a model for other nations, shaping the future of online governance and digital citizenship standards worldwide.
