A Pennsylvania woman says she was wrongfully jailed on the strength of AI-generated fake text messages, a case that shows how fabricated digital evidence can enter the criminal justice system and why courts must adapt quickly. AI tools can now produce convincing fake media, from doctored photos and videos to simulated text messages, so relying on unverified digital evidence can have devastating consequences for innocent people. The incident, now gaining national attention, exposes legal and technological weaknesses at the intersection of justice and artificial intelligence.

As deepfake technologies evolve, the risk of abuse — whether for harassment, misinformation, reputational harm, or even false criminal evidence — is increasing sharply. Experts warn that without stronger legal frameworks and more advanced detection tools, similar injustices could become commonplace. Today’s legal system, designed long before generative AI, is struggling to keep pace. This story not only explains what happened to one woman but also reveals broader warning signs for society as AI use explodes.

When AI Fiction Was Taken as Legal Fact

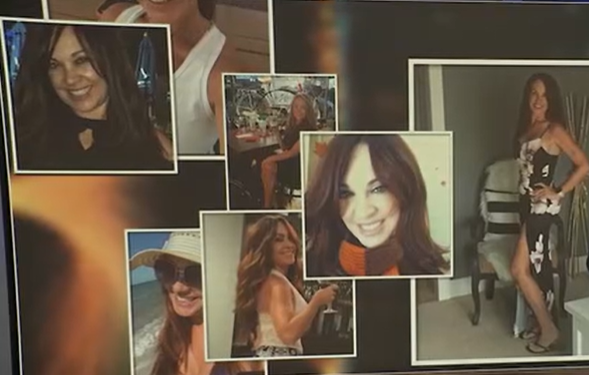

Melissa Sims, originally from Delaware County, Pennsylvania, says her life was upended when her then-boyfriend used AI tools to fabricate text messages he attributed to her. According to Sims, the fabricated messages contained disparaging or inflammatory language, which law enforcement treated as proof that she had violated the terms of her release from an earlier arrest. She spent two days in a Florida jail before the error was discovered.

The ordeal reportedly began in late 2024 after an altercation in which Sims’ boyfriend allegedly harmed himself during a disagreement. When police arrived at the scene, Sims was arrested on a battery charge — even as questions remained about the chaotic circumstances leading up to it. A judge ordered her to stay away from her partner and prohibited communication. Several months later, she says, her ex-boyfriend used an AI system to generate fake text messages that he claimed came from her. Because authorities did not verify the authenticity of these messages, Sims was again arrested for allegedly violating her bond conditions.

Legal Experts Warn of AI Evidence Risks

Judges and legal scholars are now sounding the alarm. Senior Judge Herbert Dixon, a member of the Council on Criminal Justice, told investigators that cases involving AI-generated evidence are becoming more frequent and more sophisticated, moving beyond simple fake audio recordings to deepfake video, manipulated images, and now AI-generated texts. In his view, the legal system’s standards for assessing digital evidence have not kept pace with this technology’s capabilities.

Professor Rob D’Ovidio, an expert in AI forensics, explains that detection tools are struggling to keep up. In one demonstration, AI-created images were analyzed by leading detection software, with wildly inconsistent results: some results showed only a small chance that an image was fake, while others rated it far more likely to be synthetic. The inconsistency illustrates a serious challenge. If even specialized tools can’t reliably identify deepfake content, how can courts and prosecutors be expected to do so under real-world pressure?

Why This Case Matters for Courts and Public Trust

The Sims case is far from isolated. Deepfake technology has been implicated in major scandals worldwide, from manipulated videos of global leaders spreading disinformation to sexually exploitative images created without consent. In recent years, deepfake content has even been used in social movements to deceive and divide public opinion. This growing body of AI-manipulated content has heightened public awareness that what you see or read online can no longer be assumed to be real without verification.

Perhaps most alarming, law enforcement procedures still rest on a default assumption that screenshots and other digital records are trustworthy unless proven fake. AI experts caution that this presumption must be reversed: digital evidence should be treated with skepticism until it is verified, not accepted simply because it appears plausible. Without such a shift, deepfakes could easily become a tool for framing innocent people and undermining justice.

How the Law Is Starting to Respond

Though troubling, the crisis sparked by Sims’ case has already produced some progress. In 2025, Pennsylvania Governor Josh Shapiro signed a new digital forgery law that classifies harmful AI deepfakes — particularly those used to exploit or scam individuals — as felonies. This landmark move reflects increasing recognition by lawmakers that legal systems need strong definitions and consequences for AI misuse.

However, legal reforms are uneven across states and countries. In some regions, deepfake sexual exploitation and privacy violations have prompted criminal penalties and tighter regulations, especially where minors are targeted. Other jurisdictions are still debating how to balance free speech and technological innovation against consumer safety and justice. Critics say that without national or global standards, courts will continue to encounter cases where digital deception goes unchecked.

Calls for Stronger AI Verification Standards

Advocates for improved AI regulation argue that current forensic tools simply cannot handle the sophistication of modern models. They suggest mandatory digital authentication standards — such as cryptographic watermarking of legitimate content — so that genuine texts, photos, or videos can be distinguished from AI fabrications. Without such safeguards, even judges and prosecutors may inadvertently rely on bogus evidence.
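To make the idea of cryptographic authentication concrete, here is a minimal sketch in Python of how a message record could carry a verifiable tag. It is an illustration only, not a description of any standard the advocates have proposed: the record fields, the function names, and the demo key are all hypothetical, and it uses a shared-secret HMAC from the standard library as a stand-in for the public-key signatures a real provenance scheme would more likely use.

```python
import hashlib
import hmac
import json


def canonical_bytes(record: dict) -> bytes:
    """Serialize a record deterministically so equal records yield equal bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(key: bytes, record: dict) -> str:
    """Return a hex HMAC-SHA256 tag binding the key holder to this exact record."""
    return hmac.new(key, canonical_bytes(record), hashlib.sha256).hexdigest()


def verify_record(key: bytes, record: dict, tag: str) -> bool:
    """Recompute the tag and compare in constant time; any altered field fails."""
    expected = sign_record(key, record)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    # Hypothetical key, assumed to be held by the party that originally handled the message.
    key = b"demo-key-held-by-the-messaging-provider"

    # Hypothetical message record; the field names are assumptions for illustration.
    original = {
        "sender": "alice",
        "sent_at": "2025-01-01T12:00:00Z",
        "body": "Running late.",
    }
    tag = sign_record(key, original)

    # A fabricated message reusing the genuine tag does not verify.
    forged = dict(original, body="A message that was never sent.")

    print(verify_record(key, original, tag))
    print(verify_record(key, forged, tag))
```

In this sketch the genuine record verifies and the altered one does not, which is the property that matters for evidence: a screenshot or exported text with no valid tag would not pass the check. The sketch leaves open who holds the key and how the tag would reach a court.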

Others emphasize the need for greater judicial training. Law schools and legal institutions are increasingly teaching digital forensics and AI literacy to future lawyers and judges. These educational initiatives aim to improve the justice system’s ability to scrutinize evidence with a critical eye and avoid wrongful detentions or convictions based on manipulated material. Experts also want lawmakers to fund public research into better detection tools that evolve alongside AI generation capabilities.

The Human Toll — And a Cautionary Lesson

For Sims, eight months of legal anguish ended when prosecutors dropped the bond violation charge against her, and she was ultimately acquitted of the initial battery charge she had faced. Despite the relief of vindication, she says her story is a cautionary tale for millions of Americans and people worldwide. “If this can happen to me,” she warns, “it can happen to anyone.”

Her ordeal has fueled her advocacy for stronger legal standards and increased public understanding of AI risks. As deepfake technology becomes more accessible and its use more widespread, the challenges it poses to individuals and institutions will only grow. Sims is now pushing for clearer laws outlining how digital evidence must be authenticated before it can be used in criminal cases — an effort that could protect future victims of AI deception.

Why This Matters to Everyone

A wrongful arrest based on AI-generated fake text messages is more than a bizarre headline; it is a wake-up call. Deepfake technology is no longer only a tool for pranks or misinformation. It has become a serious legal and ethical threat to personal freedom, due process, and public trust in digital information.

To protect innocent people and preserve credibility in the justice system, courts, lawmakers, and technology companies must act now. Stronger legal frameworks, robust detection tools, and greater public awareness are essential in an age where seeing isn’t believing, and reading isn’t necessarily reality.
