Scaling Neuromorphic Computing: Mimicking the Human Brain for Advanced AI

Neuromorphic computing draws on the structure and workings of the human brain to build a new kind of hardware for artificial intelligence (AI). The approach aims to overcome key limitations of traditional computing systems, above all their power consumption, and could offer a more energy-efficient platform for many AI applications. As demand grows for faster and more efficient AI, neuromorphic computing is emerging as a promising alternative to conventional hardware.

What is Neuromorphic Computing?

Neuromorphic computing refers to designing hardware and software systems that replicate the neural structures and functionality of the human brain. Conventional processors execute instructions step by step and constantly move data between separate memory and processing units. Neuromorphic systems instead process information in a massively parallel, event-driven manner, much as the brain does: an artificial neuron does work only when it receives or emits a spike. This approach can deliver low-latency processing with much lower power consumption, making it well suited to AI applications such as robotics, autonomous systems, and real-time decision-making.
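To make "event-driven" more concrete: spiking neural networks are often built from leaky integrate-and-fire (LIF) neurons. An LIF neuron accumulates input, slowly leaks charge, and emits a spike only when its potential crosses a threshold. The sketch below is a generic, minimal model under assumed parameter values (threshold 1.0, leak 0.9). It is not the neuron model of any particular chip.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Minimal discrete-time leaky integrate-and-fire (LIF) neuron.

    Parameter values are illustrative assumptions, not taken from any specific chip.
    """
    threshold: float = 1.0  # potential at which the neuron emits a spike
    leak: float = 0.9       # fraction of the potential kept from one step to the next
    potential: float = 0.0  # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Leak some of the stored charge, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def spike_times(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Return the time steps at which the neuron fired."""
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


if __name__ == "__main__":
    # A constant weak input makes the neuron fire only occasionally.
    print(spike_times(LIFNeuron(), [0.3] * 10))  # [3, 7]
```

With a constant input of 0.3, the neuron fires only at steps 3 and 7 and produces no output in between. Event-driven hardware exploits exactly this sparsity.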

Key Advancements in Neuromorphic Computing

Over the past decade, research has produced several notable neuromorphic chips that show what the approach can do. Two of the best known are:

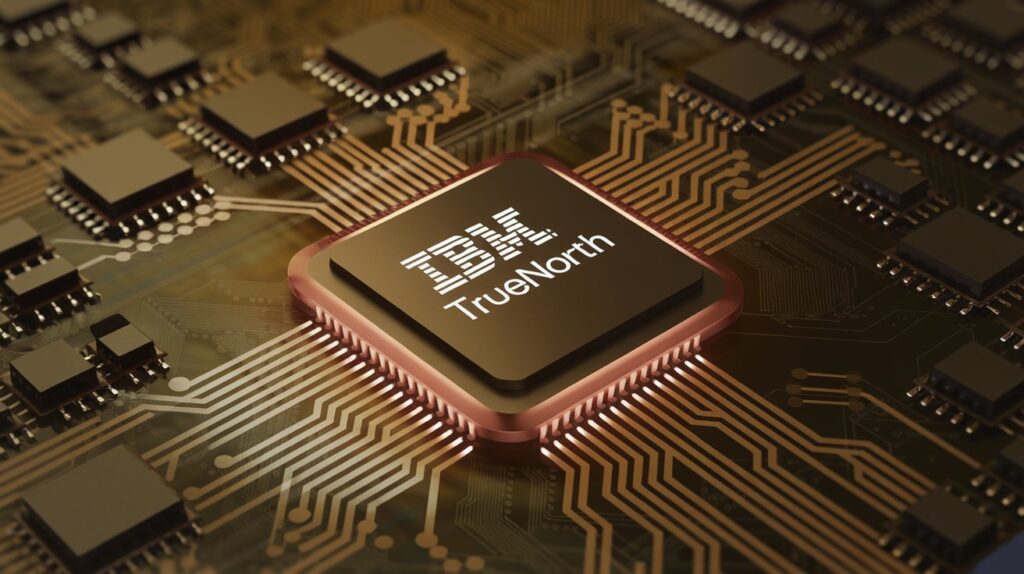

1. IBM’s TrueNorth Chip

IBM’s TrueNorth chip is a pioneering effort in neuromorphic computing. It features:

- 1 million programmable neurons and 256 million synapses.

- Event-driven computation to reduce power consumption.

- Parallel processing capabilities, enabling real-time AI applications.
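The following sketch illustrates the event-driven principle in general terms. Work is done only along the outgoing connections of neurons that actually spiked, so the cost scales with activity rather than with network size. It is not TrueNorth's actual hardware design. The connectivity layout and the `propagate` function are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical sparse connectivity: presynaptic neuron id -> [(postsynaptic id, weight)].
Synapses = dict[int, list[tuple[int, float]]]


def propagate(spiking: list[int], synapses: Synapses) -> tuple[dict[int, float], int]:
    """Deliver one time step of spikes, event-driven style.

    Only the outgoing synapses of neurons that actually fired are visited, so
    the work done scales with activity rather than with total network size.
    Returns the summed input for each postsynaptic neuron and the number of
    synaptic operations performed.
    """
    inputs: defaultdict[int, float] = defaultdict(float)
    ops = 0
    for pre in spiking:  # silent neurons cost nothing
        for post, weight in synapses.get(pre, []):
            inputs[post] += weight
            ops += 1
    return dict(inputs), ops


if __name__ == "__main__":
    synapses: Synapses = {
        0: [(10, 0.5), (11, 0.2)],
        1: [(10, -0.3)],
        2: [(12, 1.0)],
    }
    # Neurons 0 and 2 fire; neuron 1 is silent, so its synapse is never touched.
    inputs, ops = propagate([0, 2], synapses)
    print(inputs, ops)  # {10: 0.5, 11: 0.2, 12: 1.0} 3
```

A clock-driven implementation would visit all four synapses on every step. Here only three are touched, because neuron 1 stayed silent.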

2. Intel’s Loihi Chip

Intel’s Loihi chip is another major development. It runs spiking neural networks (SNNs) and adds self-learning capabilities, meaning the network's connections can be updated directly on the chip. Key features include:

- Asynchronous processing to mimic brain-like activity.

- On-chip learning without requiring cloud-based data processing.

- Energy-efficient operation suitable for edge AI applications.
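On-chip learning in SNNs typically relies on local rules that adjust a synapse based only on the timing of the spikes on either side of it. A classic example is spike-timing-dependent plasticity (STDP). The sketch below uses the textbook pair-based form of STDP, chosen here for illustration. It is not Intel's programming interface, and the parameter values (`a_plus`, `a_minus`, `tau`, weight bounds) are assumptions.

```python
import math


def stdp_update(weight: float, t_pre: float, t_post: float,
                a_plus: float = 0.01, a_minus: float = 0.012,
                tau: float = 20.0, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Pair-based spike-timing-dependent plasticity (STDP).

    If the presynaptic spike precedes the postsynaptic spike, the synapse is
    strengthened; if it follows, the synapse is weakened. The size of the
    change decays exponentially with the spike-time difference (tau, in ms).
    All parameter values are illustrative assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:
        weight += a_plus * math.exp(-dt / tau)   # potentiation: pre before post
    elif dt < 0:
        weight -= a_minus * math.exp(dt / tau)   # depression: post before pre
    return min(max(weight, w_min), w_max)        # keep the weight within bounds


if __name__ == "__main__":
    print(stdp_update(0.5, t_pre=10.0, t_post=15.0))  # strengthened
    print(stdp_update(0.5, t_pre=15.0, t_post=10.0))  # weakened
```

The rule only needs information local to each synapse, with no global gradient, which is why rules of this kind map well onto on-chip learning hardware.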

Benefits of Neuromorphic Computing for AI

Neuromorphic computing offers several potential benefits for AI, including:

- Power Efficiency: For suitable workloads, such as sparse, event-driven sensor processing, neuromorphic chips can consume far less power than traditional CPUs and GPUs, making them attractive for battery-operated devices.

- Real-Time Processing: Their parallel, event-driven architecture lets them respond with low latency, which matters in time-critical applications such as healthcare and autonomous vehicles.

- Adaptive Learning: Neuromorphic systems with on-chip learning, such as Loihi, can adapt to new data inputs in real time, reducing the need for extensive offline retraining.
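Much of the power-efficiency argument rests on sparsity. A dense, clock-driven update touches every synapse on every step, while an event-driven system touches only the synapses of neurons that fired. The back-of-the-envelope sketch below counts operations under assumed values: 1,000 neurons, 256 synapses each, and 2% of neurons spiking per step. Real energy savings depend on the hardware, memory traffic, and workload, so treat the resulting ratio as illustrative only.

```python
def synaptic_ops_per_step(n_neurons: int, fan_out: int, activity: float) -> tuple[int, int]:
    """Count synaptic operations for one time step under two update schemes.

    n_neurons: number of presynaptic neurons
    fan_out:   outgoing synapses per neuron
    activity:  fraction of neurons that spike in the step (0.0 to 1.0)
    """
    if not 0.0 <= activity <= 1.0:
        raise ValueError("activity must be between 0 and 1")
    dense = n_neurons * fan_out                           # clocked: every synapse, every step
    event_driven = round(n_neurons * activity) * fan_out  # only synapses of spiking neurons
    return dense, event_driven


if __name__ == "__main__":
    # Assumed example values; not measurements from any real chip.
    dense, sparse = synaptic_ops_per_step(n_neurons=1000, fan_out=256, activity=0.02)
    print(dense, sparse, dense / sparse)  # 256000 5120 50.0
```

At 2% activity, the event-driven scheme performs 50 times fewer synaptic operations. The gap shrinks as activity rises, which is why the benefit is largest for sparse workloads.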

Applications of Neuromorphic Computing

Neuromorphic technology is being explored across a range of industries, largely in research and pilot projects so far, to enhance AI capabilities:

- Autonomous Vehicles: Enhancing sensor fusion and decision-making for safer self-driving cars.

- Healthcare: Assisting in brain-computer interfaces and diagnostic tools that require real-time processing.

- Smart Robotics: Enabling robots to learn and adapt to their surroundings autonomously.

- Cybersecurity: Detecting complex patterns of cyber threats through real-time anomaly detection.

Challenges Facing Neuromorphic Computing

Despite its promise, neuromorphic computing faces several challenges, including:

- Software Development: A lack of standardized frameworks makes it challenging to develop neuromorphic applications.

- Scalability Issues: Expanding neuromorphic architectures to commercial-scale applications requires significant research and development.

- Integration with Existing Systems: Combining neuromorphic chips with traditional AI infrastructures remains a hurdle for widespread adoption.

Future of Neuromorphic Computing

The future of neuromorphic computing is promising, with ongoing research focused on improving chip architectures, software ecosystems, and cross-domain applications. Companies like IBM, Intel, and Qualcomm are investing heavily in this technology, driving innovation and making it more accessible for commercial use.

Conclusion

Neuromorphic computing is a promising frontier in AI development, with the potential for major gains in energy efficiency and real-time adaptability. If the challenges of software tooling, scalability, and integration can be overcome, this technology could play a pivotal role in transforming various industries, from healthcare to autonomous systems. [USnewsSphere.com / IBM]