Google Unveils 7th-Gen TPU ‘Ironwood’ – The 42.5 Exaflops Breakthrough Reshaping AI Cloud Power in 2025

Announced at Google Cloud Next 2025, Ironwood is aimed at the fast-growing demand for real-time inference, cloud efficiency, and AI scalability in the United States and beyond.

What Is Google’s Ironwood TPU and Why Is It Important?

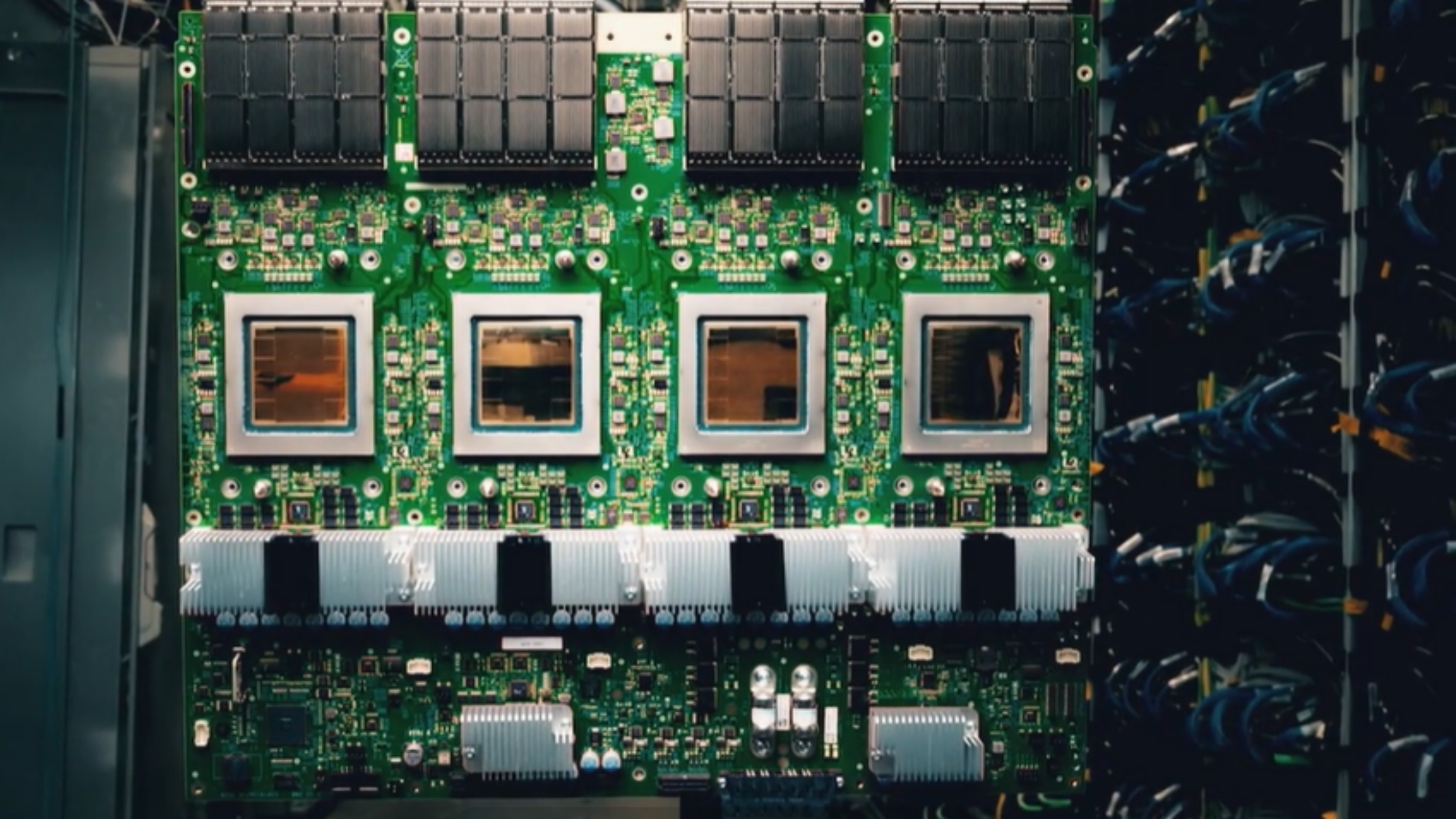

Google’s 7th-generation Tensor Processing Unit, codenamed Ironwood, is a powerful chip built to accelerate AI workloads. It represents a generational leap forward in both performance and efficiency for cloud-based AI.

Ironwood is especially important now because today’s AI systems, such as chatbots, self-driving systems, and large-scale data processors, require massive real-time inference power. Traditional hardware often struggles with latency or energy consumption, and Ironwood is designed to address both.

How Much Power Does Ironwood Actually Deliver?

Ironwood delivers unprecedented AI computing power:

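- Each individual Ironwood chip offers 4,614 teraflops of peak compute (FP8).

- A full TPU pod (9,216 chips) provides 42.5 exaflops of peak compute. Google compares this favorably with the largest supercomputers, although the pod figure is low-precision (FP8) throughput while supercomputer rankings use FP64 benchmarks, so the two are not directly comparable.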

This performance is specifically engineered for fast inference, where AI systems make rapid decisions and predictions, rather than just learning patterns.
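The pod figure follows directly from the per-chip number. As a quick sanity check, here is a minimal sketch in Python using only the two values quoted above (the function and constant names are illustrative, not part of any Google tooling):

```python
# Figures quoted in the article (peak values from Google's announcement).
TFLOPS_PER_CHIP = 4_614   # peak teraflops per Ironwood chip
CHIPS_PER_POD = 9_216     # chips in a full pod

# 1 exaflop = 10^18 FLOP/s = 10^6 teraflops
TFLOPS_PER_EXAFLOP = 1_000_000


def pod_exaflops(tflops_per_chip: float, chips: int) -> float:
    """Aggregate peak throughput of a pod, in exaflops."""
    return tflops_per_chip * chips / TFLOPS_PER_EXAFLOP


if __name__ == "__main__":
    # 4,614 * 9,216 = 42,522,624 teraflops
    print(f"{pod_exaflops(TFLOPS_PER_CHIP, CHIPS_PER_POD):.1f} exaflops")  # prints "42.5 exaflops"
```

This is a peak, best-case number: it assumes every chip runs at full rate simultaneously, which real workloads rarely achieve.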

7th-Gen TPU ‘Ironwood’ Capabilities

| Component | Specification |
|---|---|
| Peak Performance | 4,614 teraflops per chip |
| Pod Performance | 42.5 exaflops (9,216 chips) |
| Memory | 192 GB per chip (HBM) |
| Performance/Watt | 2x more efficient than Trillium |
| Target Use Case | AI Inference (Chatbots, Agents, etc.) |
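To put the memory row in context, the sketch below totals the HBM across a full pod and checks whether a model's weights would fit on a single chip. The model sizes in the example are hypothetical, chosen only for illustration, and the estimate counts weights only (no KV cache, activations, or runtime overhead).

```python
HBM_GB_PER_CHIP = 192   # from the table above
CHIPS_PER_POD = 9_216   # from the table above


def pod_hbm_petabytes(gb_per_chip: float, chips: int) -> float:
    """Total HBM across a pod in decimal petabytes (1 PB = 10^6 GB)."""
    return gb_per_chip * chips / 1_000_000


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB: 10^9 params * bytes / 10^9 bytes per GB."""
    return params_billion * bytes_per_param


def fits_on_one_chip(params_billion: float, bytes_per_param: float,
                     hbm_gb: float = HBM_GB_PER_CHIP) -> bool:
    """True if the weights alone fit in one chip's HBM (ignores KV cache etc.)."""
    return weights_gb(params_billion, bytes_per_param) <= hbm_gb


if __name__ == "__main__":
    print(f"Pod HBM: {pod_hbm_petabytes(HBM_GB_PER_CHIP, CHIPS_PER_POD):.2f} PB")
    # Hypothetical model sizes, for illustration only.
    print(fits_on_one_chip(70, 2))    # 70B params at 16-bit: 140 GB
    print(fits_on_one_chip(200, 1))   # 200B params at 8-bit: 200 GB
```

Under these assumptions the first hypothetical model fits on one chip and the second does not, which is why larger models are sharded across many chips in a pod.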

Why Ironwood Marks a Shift in AI Priorities

Earlier TPU generations, including Trillium, were used heavily for training large AI models as well as serving them. Google describes Ironwood as its first TPU designed specifically for inference, that is, for letting AI models respond to and interact with people in real time. The design emphasizes:

- Speed of response

- Energy-efficient processing

- Massive parallel scalability

Google calls this transition the “age of inference,” where latency, efficiency, and reliability matter more than ever before.

7th-Gen TPU ‘Ironwood’ vs Trillium TPU

To understand how significant Ironwood is, let’s compare it with its predecessor:

TPU Comparison Table

| Feature | Trillium (2024) | Ironwood (2025) |
|---|---|---|
| Peak Chip Performance | ~918 teraflops (BF16) | 4,614 teraflops (FP8) |
| Pod Capacity | ~0.24 exaflops (256 chips) | 42.5 exaflops (9,216 chips) |
| Memory per Chip | 32 GB HBM | 192 GB HBM |
| Energy Efficiency | Baseline | 2x Improvement |
| Optimization Focus | Training and Inference | Real-time Inference |

Ironwood does not just improve performance—it redefines priorities.
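The memory and efficiency rows of the table reduce to simple ratios. A minimal sketch, using only the memory and performance-per-watt figures from the table (the helper names are illustrative):

```python
def generation_ratio(new: float, old: float) -> float:
    """How many times larger the new value is than the old one."""
    return new / old


def relative_energy_per_op(perf_per_watt_gain: float) -> float:
    """Energy per operation relative to the baseline.

    If work done per unit of energy rises by a factor g,
    energy needed per unit of work falls to 1/g.
    """
    return 1.0 / perf_per_watt_gain


if __name__ == "__main__":
    # 192 GB vs 32 GB of HBM per chip
    print(generation_ratio(192, 32))      # 6.0
    # 2x performance per watt means half the energy for the same work
    print(relative_energy_per_op(2.0))    # 0.5
```

In other words, each Ironwood chip carries six times the memory of a Trillium chip, and the same amount of work should cost about half the energy, assuming the quoted 2x figure holds for the workload in question.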

Real-World Applications: Where Ironwood Will Make a Difference

Ironwood TPUs will power a wide range of industries that rely on real-time, AI-powered decisions. These include:

- Conversational AI: Used in personal assistants, customer support, and voice recognition systems.

- Healthcare AI: Accelerating diagnostics and medical imaging.

- Financial AI: Real-time fraud detection and market prediction.

- Autonomous Systems: Robots and self-driving systems needing fast decisions.

This diversity shows that Ironwood is not just a tech advancement—it’s a catalyst for innovation across industries.

Architecture and Design Innovations in Ironwood

One of the key improvements in Ironwood is minimized data movement. This matters because data transfer across chips is a major bottleneck in traditional systems. By optimizing for on-chip memory access and latency-sensitive operations, Ironwood reduces wasted energy and speeds up computation.
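Why data movement matters so much can be illustrated with the standard roofline model, which caps achievable throughput at the lower of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). This is a generic textbook model, not Google's own methodology. The peak compute figure comes from the article; the memory bandwidth value below is a placeholder assumption and should be replaced with the figure from Google's documentation.

```python
PEAK_FLOPS = 4_614e12      # peak FLOP/s per chip, from the article
ASSUMED_HBM_BW = 7.0e12    # bytes/s: placeholder assumption, NOT from the article


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_flops / bandwidth


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    print(f"Ridge point: {ridge_point(PEAK_FLOPS, ASSUMED_HBM_BW):.0f} FLOP/byte")

    # Illustrative case: a batch-size-1 matrix-vector product with 8-bit weights
    # does about 2 FLOPs (multiply + add) per weight byte loaded.
    low = attainable_flops(2, PEAK_FLOPS, ASSUMED_HBM_BW)
    print(f"At 2 FLOP/byte: {low / 1e12:.0f} teraflops "
          f"({100 * low / PEAK_FLOPS:.1f}% of peak)")
```

Under these assumed numbers, a low-intensity inference step reaches only a small fraction of peak compute, so cutting the bytes moved per operation (through larger on-chip reuse, batching, or more memory bandwidth) matters more than adding raw teraflops.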

Ironwood is also intended to serve Google’s Gemini models (the family behind the assistant formerly branded as Bard) and other LLMs that increasingly power search, cloud tools, and assistant technologies.

Conclusion: Ironwood Isn’t Just an Upgrade — It’s a Paradigm Shift in AI

Google’s Ironwood TPU marks a shift in emphasis from model training to real-time AI inference. With 42.5 exaflops of peak pod compute, 192 GB of HBM per chip, and roughly twice the performance per watt of its predecessor, Ironwood is not just more powerful; it is engineered for serving AI models at scale.

For businesses, developers, and AI enthusiasts in the USA, keeping up with Ironwood is essential to understanding where cloud computing and artificial intelligence are heading.

[USnewsSphere.com / bg]